How Networks in the Brain Give Us Artificial Intelligence

- Published: 5 Oct 2023

- Source: BrainFacts/SfN

Networks of neurons that fire together, wire together. And neuronal networks you don’t use, you may lose. Our interactions with the world refine the strength and structure of our neural networks, which influence all our thoughts, feelings, and actions. Inspired by the power of our biology, researchers have mimicked these processes in computer models. These artificial neural networks serve as the building blocks for many artificial intelligence (AI) applications, enabling computers to learn, reason, and make decisions in ways that resemble human thought.

This is a video from the 2023 Brain Awareness Video Contest.

Created by Manveer Chauhan and Ria Agarwal.

Transcript

In each of our brains, we have around 100 billion cells called neurons. These cells are specialized to generate pulses of electricity to transmit information. Neurons also form extensive connections with one another. We have around 1,000 trillion of these connections, which are also known as synapses.

Synapses act as a bridge that allows neurons to communicate with each other through chemical messengers called neurotransmitters. Neuroscientists call groups of neurons linked by synaptic connections neural networks.

An important property of neural networks is a feature called synaptic plasticity, which refers to our brain's ability to modify the strength of signals transmitted through each synapse. Let's delve deeper into the two main forms of synaptic plasticity.

The first is called long-term potentiation, or LTP. LTP strengthens a synapse, increasing the effect of its chemical signals, when the two neurons it connects fire together repeatedly. In effect, this process marks the combinations of neurons, or signaling pathways, that are important for a particular task. For this reason, LTP is thought to be a key cellular basis of learning and memory formation.
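The idea that neurons which fire together wire together is often summarized as a Hebbian learning rule: the change in a connection's strength is proportional to the product of the sending (pre) and receiving (post) neurons' activity. The sketch below is a minimal illustration of that rule, not a model of the molecular machinery behind LTP; the function names and the learning rate of 0.1 are hypothetical choices for this example.

```python
def hebbian_update(weight: float, pre: float, post: float, rate: float = 0.1) -> float:
    """One Hebbian step: the weight grows by rate * pre * post."""
    return weight + rate * pre * post


def train_hebbian(pairs, weight: float = 0.0, rate: float = 0.1) -> float:
    """Apply the Hebbian rule over a sequence of (pre, post) activity pairs."""
    for pre, post in pairs:
        weight = hebbian_update(weight, pre, post, rate)
    return weight


# 1 = the neuron fired on that trial, 0 = it stayed silent (hypothetical data).
together = [(1, 1)] * 5          # both neurons fire on every trial
apart = [(1, 0), (0, 1)] * 5     # their firing never coincides

print(train_hebbian(together))   # connection strengthens with each coincidence
print(train_hebbian(apart))      # connection never strengthens
```

Only coincident activity changes the weight, which is the core of the "fire together, wire together" intuition.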

The second form of synaptic plasticity is called long-term depression, or LTD. In contrast to long-term potentiation, LTD weakens synaptic connections between neurons that are not frequently used together. By weakening unnecessary connections, neural networks become more efficient at processing information and performing their specific functions. These principles of synaptic plasticity apply throughout the brain and are what allow us to change and adapt to our environments.
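A common way to add weakening to a Hebbian rule is a weight-decay term: every synapse loses a small fraction of its strength each step unless coincident firing reinforces it. This is a deliberate simplification (real LTD is itself triggered by particular activity patterns, not by passive decay), and the rates, the 50-step run, and the pruning threshold below are hypothetical values chosen for illustration.

```python
POTENTIATION = 0.1   # hypothetical LTP-like gain per coincident firing
DEPRESSION = 0.05    # hypothetical fraction of strength lost every step
THRESHOLD = 0.2      # hypothetical cutoff below which a synapse is pruned


def update(weight: float, pre: int, post: int) -> float:
    # Strengthen when both neurons fire together (LTP-like term),
    # and weaken every synapse a little each step (LTD-like decay term).
    return weight + POTENTIATION * pre * post - DEPRESSION * weight


def simulate(pre: int, post: int, weight: float = 1.0, steps: int = 50) -> float:
    """Run the rule with constant activity and return the final weight."""
    for _ in range(steps):
        weight = update(weight, pre, post)
    return weight


used = simulate(pre=1, post=1)     # both neurons fire on every step
unused = simulate(pre=0, post=0)   # the connection is never used

for name, w in [("used", used), ("unused", unused)]:
    status = "kept" if w >= THRESHOLD else "pruned"
    print(f"{name}: weight={w:.3f} ({status})")
```

With these settings the used synapse settles toward a stable strength of 2.0 (where potentiation and decay balance), while the unused one decays toward zero and falls below the pruning threshold.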

Our life experiences and interactions with the world around us are what drive the refinement of the neural networks that give rise to all of our thoughts, feelings, and actions. Inspired by the power of neural networks in our brains and the concept of synaptic plasticity, researchers have been able to mimic these processes in computer models called artificial neural networks. These networks serve as the building blocks for many artificial intelligence (AI) applications, enabling computers to learn, reason, and make decisions in ways that resemble human thought.

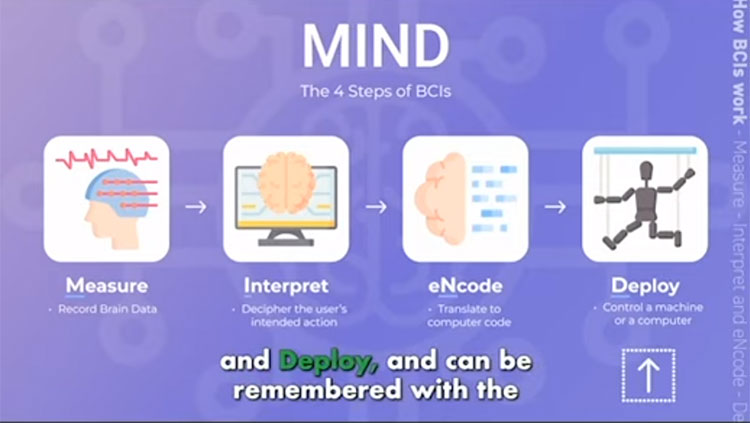

Artificial neural networks can be represented like this. Each node, pictured as a circle, represents a neuron. Nodes pass signals to one another through connections of varying strength, called weights, which can be strengthened or weakened, much like our brain's use of long-term potentiation and long-term depression. With repeated exposure to data, an artificial neural network adjusts these connection strengths to produce particular functions or outputs.
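To make "adjusting the strength of connections" concrete, here is a minimal sketch of a single artificial neuron (a sigmoid unit, as used in logistic regression) trained with gradient descent to reproduce the logical OR function. This is a standard textbook technique rather than the method behind any system mentioned in the video, and gradient descent is only loosely analogous to LTP and LTD; the OR task, epoch count, and learning rate are hypothetical choices.

```python
import math


def sigmoid(z: float) -> float:
    """Squash a weighted sum into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def predict(weights, bias, inputs):
    # Each input signal is scaled by its connection weight, then summed.
    return sigmoid(sum(w * x for w, x in zip(weights, inputs)) + bias)


def train(data, epochs: int = 2000, rate: float = 0.5):
    """Repeatedly nudge the weights to reduce prediction error."""
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in data:
            error = predict(weights, bias, inputs) - target
            # Gradient of the cross-entropy loss for a sigmoid unit:
            # connections that contributed to the error change the most.
            weights = [w - rate * error * x for w, x in zip(weights, inputs)]
            bias -= rate * error
    return weights, bias


OR_DATA = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 1)]
weights, bias = train(OR_DATA)
for inputs, target in OR_DATA:
    print(inputs, "target:", target, "prediction:", round(predict(weights, bias, inputs)))
```

Before training every weight is zero and the neuron outputs 0.5 for every input; after repeated exposure to the four examples, the weights grow until its rounded outputs match the OR targets.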

The similarities between biological and artificial neural networks become clear in the example of language. When we learn a new language, we start by observing and listening to others. We hear the sounds of letters, words, phrases and conversations. As the signals within our neural networks gradually adapt and refine thanks to LTP and LTD, our brain begins to recognize patterns and meaning.

Similarly, AI can learn to generate language when exposed to large amounts of text. It can analyze the letters, words, phrases, and relationships within text, and through plasticity in artificial neural networks, it can begin recognizing patterns and associations between words. Much like in humans, as artificial neural networks continue to adapt and refine when shown more data, AI can improve its understanding of language and can even start writing its own human-like text.
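The idea of learning associations between words from text can be illustrated with a bigram model, which simply counts how often each word follows another. To be clear, this is a counting technique, not a neural network: modern language models instead train artificial neural networks to predict the next piece of text. The sketch only shows, under that simplification, how statistics gathered from text can drive generation; the tiny corpus and function names are hypothetical.

```python
from collections import Counter, defaultdict


def learn_bigrams(text: str):
    """Count how often each word is immediately followed by each other word."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def generate(follows, start: str, length: int = 5) -> str:
    """Greedily extend `start` with the most frequent next word."""
    output = [start]
    for _ in range(length - 1):
        candidates = follows.get(output[-1])
        if not candidates:
            break  # no known continuation for this word
        output.append(candidates.most_common(1)[0][0])
    return " ".join(output)


corpus = (
    "neurons that fire together wire together "
    "neurons that fire apart wire apart "
    "neurons that fire together learn together"
)
model = learn_bigrams(corpus)
print(model["fire"])               # Counter({'together': 2, 'apart': 1})
print(generate(model, "neurons"))  # neurons that fire together wire
```

When two continuations are equally common, `Counter.most_common` returns the one encountered first, which is why "together" is followed by "wire" here.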

Using our understanding of neural networks in the brain, we can build AI programs that learn to compose classical music in the style of Mozart, play chess better than top human players, spot cancerous tumors in X-rays, drive cars, or even generate human-like speech. In fact, the voice you've been hearing throughout this video was generated entirely by artificial neural networks.

The applications of neural networks are vast and ever-evolving, and they are likely to significantly shape the future of our society.