Taking a Human-Centered Approach to Artificial Intelligence

- Published 20 Nov 2020

- Author Courtney Columbus

- Source BrainFacts/SfN

The memory still makes Fei-Fei Li smile: a group of high school girls, all from underrepresented populations, braiding each other’s hair and chatting about deep learning neural networks. The girls were participants in AI4ALL, a program Li created to introduce young people to the field of artificial intelligence.

“It was just so human, and so beautiful,” Li told her audience during the Dialogues Between Neuroscience and Society Lecture at Neuroscience 2019 in Chicago. She designed AI4ALL for future AI leaders who might not otherwise have access to such an experience. “This technology is going to impact humanity’s future and everybody at the steering wheel looked the same.”

Concern for humanity guides Li’s human-centered approach to AI. Three principles comprise her vision for the future of the field: AI should be developed with a focus on human impact, AI should augment and enhance humanity, and AI should be inspired by human intelligence.

Li has been a driving force in AI since she developed ImageNet, a database of 14 million annotated images used by researchers around the globe to train visual object recognition software. Its images are divided into categories like “amphibian,” “food,” “flower,” or “geological formation.”

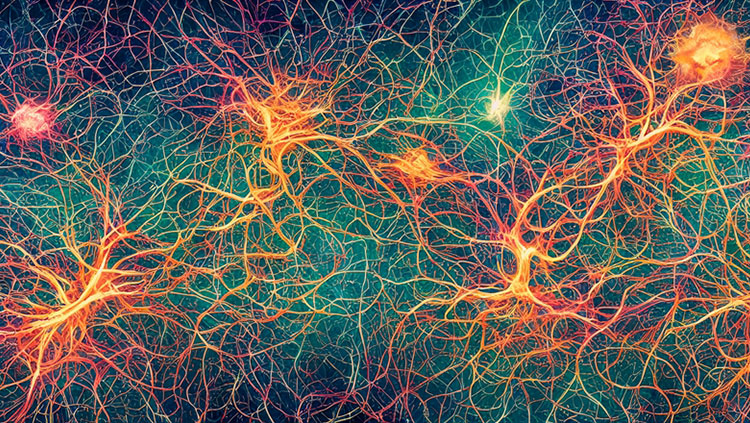

“You show the algorithm a bunch of training data with labels — pictures of cats or dogs or chairs — and then the algorithm learns to match the pattern it sees with the label it’s given,” Li explained. The more information the database contained, the better the algorithm could recognize and identify images. According to Li, the idea was to “reboot the thinking of computer vision and machine learning using big data to drive the learning of object categories.”
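To make the idea of learning from labeled examples concrete, here is a toy sketch in Python. It is not how ImageNet-era systems work: those learn directly from pixels, while this hypothetical example assumes each picture has already been boiled down to two made-up numbers. It uses a simple nearest-centroid rule: average the examples for each label, then give a new picture the label whose average it sits closest to.

```python
import math

def train(examples):
    """Average the feature vectors seen for each label, giving one 'centroid' per label."""
    sums, counts = {}, {}
    for features, label in examples:
        if label not in sums:
            sums[label] = [0.0] * len(features)
            counts[label] = 0
        for i, value in enumerate(features):
            sums[label][i] += value
        counts[label] += 1
    return {label: [total / counts[label] for total in sums[label]] for label in sums}

def predict(centroids, features):
    """Return the label whose centroid lies closest to the new example."""
    return min(centroids, key=lambda label: math.dist(features, centroids[label]))

# Hypothetical two-number summaries of pictures, each paired with a human-given label.
training_data = [
    ((0.9, 0.2), "cat"),
    ((0.8, 0.3), "cat"),
    ((0.3, 0.9), "dog"),
    ((0.2, 0.8), "dog"),
]

model = train(training_data)
print(predict(model, (0.85, 0.25)))  # cat
print(predict(model, (0.1, 0.95)))   # dog
```

Each extra labeled example nudges its label’s average, a small-scale version of the point that more training data generally helps the algorithm match patterns to labels.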

ImageNet’s success lay in its yearly challenge. From 2010 to 2017, researchers around the world pitted their best algorithms against one another on ImageNet data to see which could most accurately recognize 1,000 categories of everyday objects. The competition got interesting in 2012, when Geoffrey Hinton and his students won using an old family of algorithms known as convolutional neural networks.

“That result brought the classification error down in a surprisingly significant way,” Li recalled. “Since then, the results have steadily improved.”
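The challenge scored entries by classification error, and its headline figure was top-5 error: an image counts as correctly classified if the true label appears anywhere among the model’s five highest-ranked guesses. The sketch below computes that metric; the function name, labels, and guesses are hypothetical and chosen only for illustration.

```python
def top_k_error(ranked_guesses, true_labels, k=5):
    """Return the fraction of images whose true label is missing from the top-k guesses."""
    misses = sum(
        1
        for guesses, truth in zip(ranked_guesses, true_labels)
        if truth not in guesses[:k]  # guesses are ordered from most to least confident
    )
    return misses / len(true_labels)

# Hypothetical ranked guesses for four images (most confident first).
ranked_guesses = [
    ["tiger cat", "tabby cat", "lynx", "red fox", "Egyptian cat"],
    ["sofa", "armchair", "table", "lamp", "desk"],
    ["daisy", "sunflower", "tulip", "rose", "lily"],
    ["goldfish", "koi", "tench", "eel", "starfish"],
]
true_labels = ["tabby cat", "sofa", "volcano", "goldfish"]

print(top_k_error(ranked_guesses, true_labels, k=1))  # 0.5
print(top_k_error(ranked_guesses, true_labels, k=5))  # 0.25
```

Allowing five guesses forgives near-misses such as confusing two similar cat breeds, which is one reason the top-5 number is lower than the strict top-1 number.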

Convolutional neural networks sparked a boom in the field, and AI has since worked its way out of the lab and into society. “It has not only entered daily life but also is driving the fourth Industrial Revolution,” Li noted. Knowing that AI will have, and already has, a big effect on humanity, Li turned her attention to human-centered AI and the three principles informing it.

Li’s first principle holds that AI should be developed with a focus on its human impact. In keeping with that principle, Li is reevaluating algorithms and working to eliminate machine-learning bias. While designing or training a program, humans may introduce unintended biases or prejudices, which can cause the algorithm to produce systematically prejudiced results.

Li’s team is reviewing ImageNet’s data for machine-learning biases, specifically in the people categories. Recently, they identified offensive categories, such as racial or sexual characterizations, and proposed removing them from the database. Li also pointed to researchers who are re-examining law enforcement data to make the decisions an AI system bases on that data fairer. “We can use machine learning to turn a document of law enforcement that carries race information through names, addresses, zip codes, and hair color, into a race blind document,” she explained.

Many people fear AI’s seeming power to one day take over the workforce or eliminate privacy. That’s where Li’s second principle comes in: AI must augment and enhance humanity. People can benefit from the way AI is deployed, for example when it helps address limitations of the healthcare system.

Every year in the United States, more than 90,000 people die from hospital-acquired infections, and good hand hygiene is critically important to reducing them. It matters so much that, to track clinicians’ hygiene practices, “hospitals hire human monitors,” Li noted. “You can imagine how expensive, erroneous, and subjective this process is.”

Even with all that effort, poor hand hygiene remains a common problem in hospitals. Li’s team designed a system that uses sensor technology and algorithms similar to those in self-driving cars. The team recognized that an environment where humans care for other humans is especially complex. The system creates a three-dimensional reconstruction of the hospital and of people’s activities within it. The technology can monitor for medical errors and lapses in hand hygiene, and alert doctors when they need to pay attention to their hygiene practices. Whereas a person with a clipboard will tire of the task, the AI system won’t.

Unlike those human monitors, however, AI doesn’t think like us. This gap underlies Li’s third principle: AI should be inspired by human intelligence. For example, while a computer can identify the components in a picture, it can’t see the full context. Li explained, “You and I see a whole different story [in a picture]. We see emotion. We might predict what’s going to happen next.”

To develop AI that processes information more like we do, Li works with a large team of researchers that includes, among others, Dan Yamins, a professor of computer science and psychology, and Michael Frank, who studies language learning in children. The goal is to create machines that learn like humans.

“Human intelligence doesn’t develop by someone [showing babies] 1,000 objects with labels,” said Li. “Babies explore, they break things, they’re driven by curiosity. We developed an AI system that’s intrinsically motivated by curiosity.”

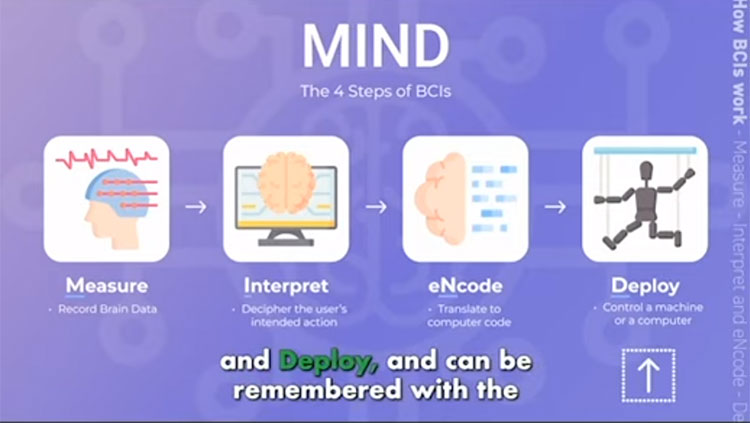

The team has imbued a computer algorithm, or artificial agent, with curiosity: a drive to seek novelty and explore its environment. Represented as a baby silhouette in what looks a bit like a low-quality video game, the artificial agent first learns to swivel its head and look around. To compare the artificial agent with real humans, Frank has been working with preschool children and observing how they learn.
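The team’s agent is far more sophisticated than anything that fits in a few lines, and the code below is not their method. As a rough illustration of what “seeking novelty” can mean for an algorithm, it swaps in a common, simple form of intrinsic motivation, a count-based novelty bonus: the agent rewards itself for looking at things it has rarely seen, and that reward shrinks as they become familiar. The head directions and objects are hypothetical.

```python
import math
from collections import Counter

def novelty(count):
    """Intrinsic reward: 1.0 for something never seen, shrinking as it becomes familiar."""
    return 1.0 / math.sqrt(1 + count)

def explore(views, steps):
    """Greedily turn toward whichever view currently offers the most novelty.

    `views` maps a hypothetical head direction to what the agent would see there.
    Returns the sequence of directions the agent chose to look.
    """
    seen = Counter()  # how many times each *observation* (not direction) has been viewed
    choices = []
    for _ in range(steps):
        # max() keeps the first direction listed when scores tie, so runs are deterministic.
        direction = max(views, key=lambda d: novelty(seen[views[d]]))
        seen[views[direction]] += 1
        choices.append(direction)
    return choices

views = {"left": "wall", "center": "ball", "right": "wall"}
print(explore(views, 4))  # ['left', 'center', 'left', 'center']
```

Nobody tells the agent what to look at; the only signal is its own familiarity. Because novelty is tracked per observation rather than per direction, glancing at the wall on the left also makes the identical wall on the right familiar, so the agent’s attention alternates between the wall and the ball instead of cycling through all three directions.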

“There’s a lot of AI out there that’s inspired generally by human kids and human learning, but there’s much less that’s been evaluated systematically with respect to whether its behavior looks like how kids learn. That’s what we’re doing,” Frank said. “We’re all hoping that this kind of exploratory and curious behavior might prove to be a really important driver of the synthesis between AI and cognitive science.”

Early results show the artificial agent “goes through an early stage of understanding and then starts to focus on objects without anybody telling it to,” said Li. In other words, it seems to behave like a human baby. The team is exploring how to use some of the algorithm’s principles in robots.

Today’s robots are very good at doing one task over and over again, but they struggle in uncertain environments. A curiosity-driven algorithm may allow them to navigate their surroundings more flexibly. Yamins points out that information generated by the algorithm could also improve our understanding of the human brain and cognition.

At one point in her talk, Li shared a quote attributed to Albert Einstein with her audience: “It has become appallingly obvious that our technology has exceeded our humanity.”

“The most important word or reminder Einstein has for us is the word humanity,” said Li, and she carries that reminder throughout her research, as she ushers in a new era of AI.

Additional reporting and writing by Hannah Zuckerman


References

Princeton University, Engineering School. (2020, February 14). Researchers devise approach to reduce biases in computer vision data sets. ScienceDaily. Retrieved October 29, 2020, from www.sciencedaily.com/releases/2020/02/200214105246.htm

Eye On A.I.: Episode 44 - Fei-Fei Li. (n.d.). Retrieved October 29, 2020, from https://aneyeonai.libsyn.com/episode-44-fei-fei-li

Dialogues Between Neuroscience and Society: Fei-Fei Li. (2019). Retrieved from https://www.youtube.com/watch?v=xODWJzwGKb0
